As virtual assistants become ubiquitous, users increasingly interact with them to learn about new topics or obtain recommendations, and they expect capabilities beyond narrow dialogues of one or two turns. Dynamic planning, namely the ability to look ahead and replan based on the flow of the conversation, is an essential ingredient for building engaging conversations with the deeper, open-ended interactions that users expect.

While large language models (LLMs) now beat state-of-the-art approaches on many natural language processing benchmarks, they are typically trained to output the next best response rather than to plan ahead, which is required for multi-turn interactions. However, in the past few years, reinforcement learning (RL) has delivered remarkable results on specific problems that involve dynamic planning, such as winning games and protein folding.

Today, we are sharing our recent advances in dynamic planning for human-to-assistant conversations, in which we enable an assistant to plan a multi-turn conversation towards a goal and adapt that plan in real time by adopting an RL-based approach. Here we look at how to improve long interactions by applying RL to compose answers from information extracted from reputable sources, rather than relying on content generated by a language model. We expect that future versions of this work could combine LLMs and RL in multi-turn dialogues. Deploying RL “in the wild” in a large-scale dialogue system proved a formidable challenge due to the modeling complexity, the extremely large state and action spaces, and the significant subtlety involved in designing reward functions.

What is dynamic planning?

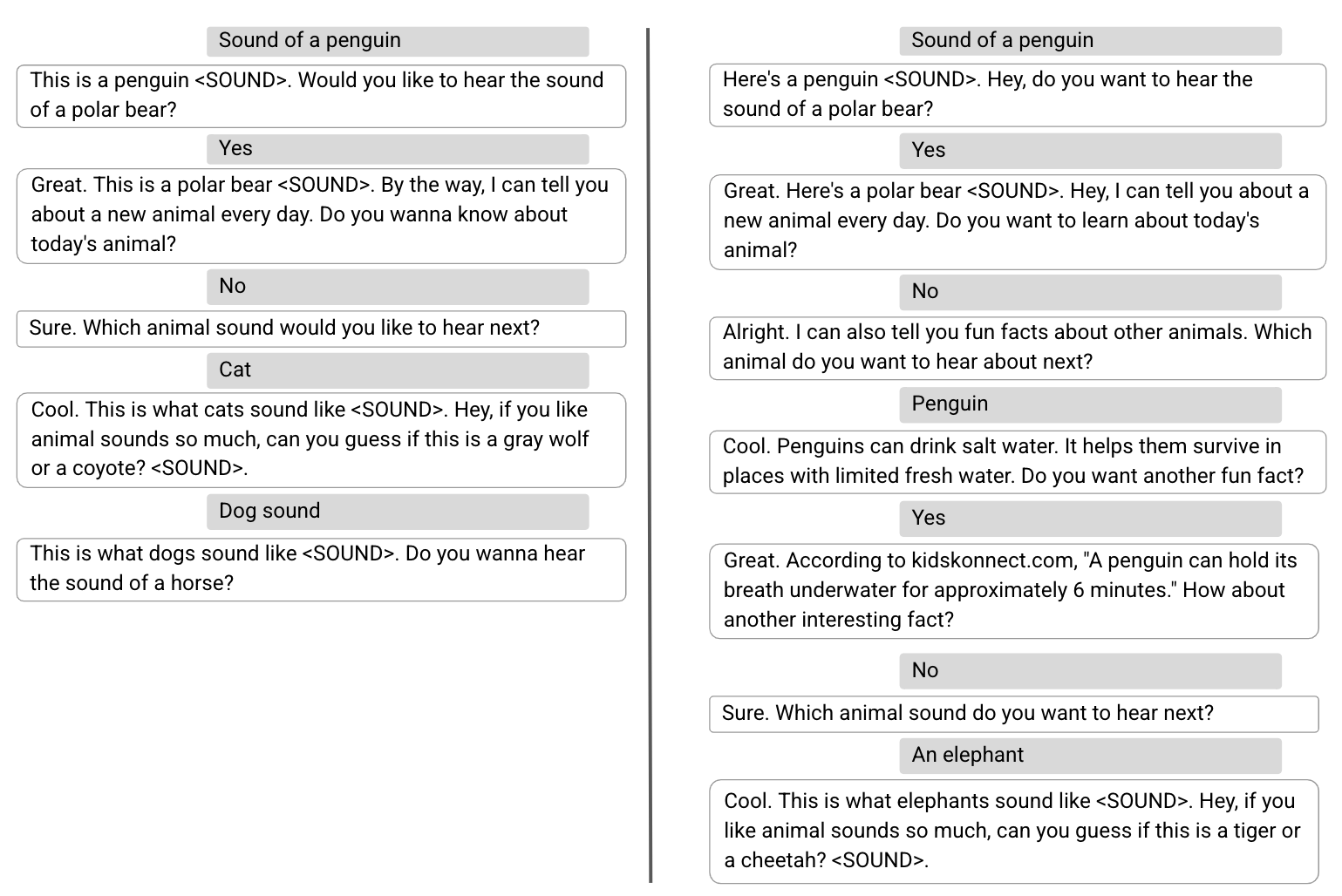

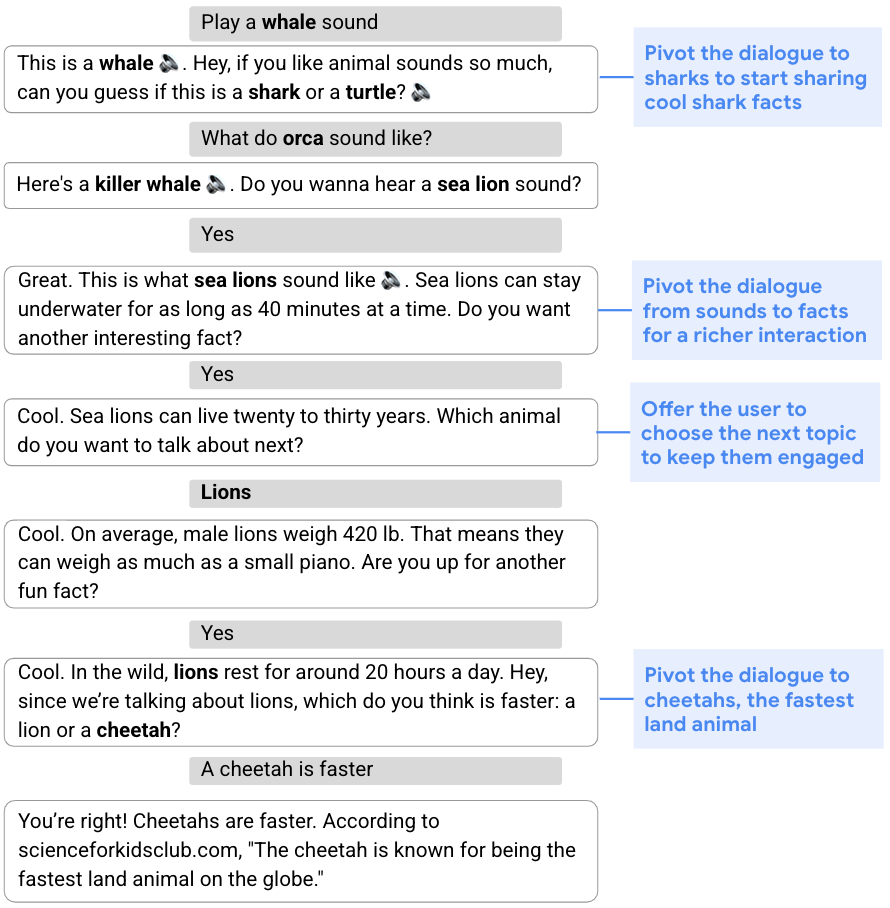

Many types of conversations, from gathering information to offering recommendations, require a flexible approach and the ability to modify the original plan for the conversation based on its flow. This ability to shift gears in the middle of a conversation is known as dynamic planning, as opposed to static planning, which refers to a more fixed approach. In the example conversation illustrated here, the goal is to engage the user by sharing interesting facts about cool animals. To begin, the assistant steers the conversation to sharks via a sound quiz. Given the user’s lack of interest in sharks, the assistant then develops an updated plan and pivots the conversation to sea lions, lions, and then cheetahs.

The assistant dynamically modifies its original plan to talk about sharks and shares facts about other animals.

Dynamic composition

To cope with the challenge of conversational exploration, we separate the generation of assistant responses into two parts: 1) content generation, which extracts relevant information from reputable sources, and 2) flexible composition of such content into assistant responses. We refer to this two-part approach as dynamic composition. Unlike LLM methods, this approach gives the assistant full control over the source, correctness, and quality of the content that it may offer. At the same time, it achieves flexibility via a learned dialogue manager that selects and combines the most appropriate content.

In an earlier paper, “Dynamic Composition for Conversational Domain Exploration”, we describe a novel approach that consists of: (1) a collection of content providers, which offer candidates from different sources, such as news snippets, knowledge graph facts, and questions; (2) a dialogue manager; and (3) a sentence fusion module. Each assistant response is incrementally constructed by the dialogue manager, which selects candidates proposed by the content providers. The selected sequence of utterances is then fused into a cohesive response.
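The composition loop described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the implementation from the paper: the names `Candidate`, `compose_response`, `fuse`, the two toy providers, and the hand-written `toy_score` function are all hypothetical, and the fusion step is reduced to joining sentences with a space, whereas the paper uses a dedicated sentence fusion module.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Candidate:
    """One utterance proposed by a content provider (hypothetical type)."""
    provider: str
    text: str


# A provider sees the dialogue history and the parts selected so far.
Provider = Callable[[List[str], List[Candidate]], List[Candidate]]
# A scorer stands in for the learned dialogue manager.
Scorer = Callable[[List[str], List[Candidate], Candidate], float]


def fuse(parts: List[Candidate]) -> str:
    """Placeholder fusion: simply join the selected utterances."""
    return " ".join(p.text for p in parts)


def compose_response(history: List[str], providers: List[Provider],
                     score: Scorer, max_parts: int = 3,
                     stop_threshold: float = 0.0) -> str:
    """Incrementally build one assistant response from provider candidates."""
    selected: List[Candidate] = []
    for _ in range(max_parts):
        # Gather fresh candidates from every provider, skipping repeats.
        candidates = [c for p in providers for c in p(history, selected)
                      if c not in selected]
        if not candidates:
            break
        best = max(candidates, key=lambda c: score(history, selected, c))
        # Stop when even the best candidate is not worth adding.
        if score(history, selected, best) <= stop_threshold:
            break
        selected.append(best)
    return fuse(selected)


# Toy providers and scorer, purely for illustration.
def fact_provider(history, selected):
    return [Candidate("fact", "Lions live in groups called prides.")]


def question_provider(history, selected):
    return [Candidate("question", "Do you want to hear about cheetahs next?")]


def toy_score(history, selected, cand):
    used = {c.provider for c in selected}
    if cand.provider in used:
        return 0.0  # avoid two parts of the same kind
    return 2.0 if cand.provider == "fact" else 1.0  # fact first, then question


response = compose_response(["How does a lion sound?"],
                            [fact_provider, question_provider], toy_score)
print(response)
```

In this sketch the scorer is a fixed heuristic; in the approach described in this post, that role is played by a dialogue manager whose selection policy is learned.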

Dynamic planning using RL

At the core of the assistant response composition loop is a dialogue manager trained using off-policy RL, namely an algorithm that evaluates and improves a policy that is different from the policy used by the agent (in our case, the latter is based on a supervised model). Applying RL to dialogue management presents several challenges, including a large state space (the state represents the conversation state, which needs to account for the whole conversation history) and an effectively unbounded action space (which may include all existing words or sentences in natural language).

We address these challenges using a novel RL construction. First, we leverage powerful supervised models, specifically recurrent neural networks (RNNs) and transformers, to provide a succinct and effective dialogue state representation. These state encoders are fed with the dialogue history, composed of a sequence of user and assistant turns, and output a representation of the dialogue state in the form of a latent vector.
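To make the idea of a state encoder concrete, here is a toy recurrent encoder in pure Python. It is a sketch under strong assumptions and not the encoder used in this work: the class name `TinyRecurrentEncoder`, the hashed bag-of-words features, the dimensions, and the fixed random (untrained) weights are all hypothetical choices made to keep the example self-contained. It only illustrates the interface: a sequence of user and assistant turns goes in, and a fixed-size latent vector comes out.

```python
import math
import random
import zlib


class TinyRecurrentEncoder:
    """Toy Elman-style RNN: h_t = tanh(W h_{t-1} + U x_t), untrained weights."""

    def __init__(self, input_dim=16, state_dim=8, seed=0):
        rng = random.Random(seed)  # fixed seed keeps the sketch deterministic
        self.input_dim = input_dim
        self.state_dim = state_dim
        self.W = [[rng.uniform(-0.5, 0.5) for _ in range(state_dim)]
                  for _ in range(state_dim)]
        self.U = [[rng.uniform(-0.5, 0.5) for _ in range(input_dim)]
                  for _ in range(state_dim)]

    def featurize(self, speaker, text):
        """Hash speaker-tagged tokens into a small bag-of-words vector."""
        x = [0.0] * self.input_dim
        for token in text.lower().split():
            key = f"{speaker}:{token}".encode("utf-8")
            x[zlib.crc32(key) % self.input_dim] += 1.0
        return x

    def encode(self, turns):
        """Fold a list of (speaker, text) turns into one latent state vector."""
        h = [0.0] * self.state_dim
        for speaker, text in turns:
            x = self.featurize(speaker, text)
            h = [math.tanh(sum(w * hj for w, hj in zip(self.W[i], h)) +
                           sum(u * xj for u, xj in zip(self.U[i], x)))
                 for i in range(self.state_dim)]
        return h


history = [
    ("user", "How does a lion sound?"),
    ("assistant", "Here is a lion roaring. Want to hear a shark fact?"),
    ("user", "No"),
]
encoder = TinyRecurrentEncoder()
state = encoder.encode(history)
print(len(state))
```

A trained RNN or transformer would replace the random weights and hashed features here; the point of the sketch is only that the whole history is compressed into one vector that downstream components can consume.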

Second, we use the fact that a relatively small set of reasonable candidate utterances or actions can be generated by content providers at each conversation turn, and we limit the action space to these. Whereas the action space is typically fixed in RL settings, because all states share the same action space, ours is a non-standard space in which the candidate actions may differ with each state, since content providers generate different actions depending on the dialogue context. This puts us in the realm of stochastic action sets, a framework that formalizes cases where the set of actions available in each state is governed by an exogenous stochastic process, which we address using Stochastic Action Q-Learning, a variant of the Q-learning approach. Q-learning is a popular off-policy RL algorithm that does not require a model of the environment to evaluate and improve the policy. We trained our model on a corpus of crowd-compute-rated conversations obtained using a supervised dialogue manager.
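The key difference from standard Q-learning can be shown with a small tabular sketch: the bootstrap target takes the maximum only over the actions that were actually available in the next state, rather than over a fixed global action set. This is a simplified illustration under stated assumptions, not the model from this work (which scores candidates using a learned state representation). The function names, the state and action labels, the rewards, and the hyperparameters are all hypothetical.

```python
from collections import defaultdict


def sas_q_learning(transitions, alpha=0.1, gamma=0.9, epochs=200):
    """Off-policy tabular Q-learning over logged transitions.

    Each transition is (state, action, reward, next_state, next_actions),
    where next_actions is the candidate set that was realized in next_state.
    An empty next_actions list marks the end of the conversation.
    """
    q = defaultdict(float)
    for _ in range(epochs):
        for s, a, r, s_next, next_actions in transitions:
            if next_actions:
                # Maximize only over the actions offered in the next state.
                target = r + gamma * max(q[(s_next, a2)] for a2 in next_actions)
            else:
                target = r
            q[(s, a)] += alpha * (target - q[(s, a)])
    return q


def greedy_action(q, state, available_actions):
    """Pick the best action among those currently proposed."""
    return max(available_actions, key=lambda a: q[(state, a)])


# Toy logged data (hypothetical): the candidate set after a fact varies.
logged = [
    ("start", "play_sound", 1.0, None, []),
    ("start", "tell_fact", 0.0, "after_fact", ["ask_question", "play_sound"]),
    ("start", "tell_fact", 0.0, "after_fact", ["play_sound"]),
    ("after_fact", "ask_question", 3.0, None, []),
    ("after_fact", "play_sound", 0.5, None, []),
]

q_values = sas_q_learning(logged)
print(greedy_action(q_values, "start", ["play_sound", "tell_fact"]))
print(greedy_action(q_values, "after_fact", ["play_sound"]))
```

In this toy data, playing a sound yields the larger immediate reward, but telling a fact leads to a state where a higher-value follow-up question is sometimes available, so the learned values favor the fact. That is the kind of look-ahead that distinguishes planning from choosing the next best response.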

Reinforcement learning model evaluation

We compared our RL dialogue manager with a launched supervised transformer model in an experiment using Google Assistant, which conversed with users about animals. A conversation starts when a user triggers the experience by asking an animal-related query (e.g., “How does a lion sound?”). The experiment was conducted using an A/B testing protocol, in which a small percentage of Assistant users were randomly sampled to interact with our RL-based assistant while other users interacted with the standard assistant.

We found that the RL dialogue manager conducts longer, more engaging conversations. It increases conversation length by 30% while improving user engagement metrics. We see an increase of 8% in cooperative responses to the assistant’s questions (e.g., “Tell me about lions,” in response to “Which animal do you want to hear about next?”). Although there is also a large increase in nominally “non-cooperative” responses (e.g., “No,” as a reply to a question proposing additional content, such as “Do you want to hear more?”), this is expected, as the RL agent takes more risks by asking pivoting questions. While a user may not be interested in the conversational direction proposed by the assistant (e.g., pivoting to another animal), the user will often continue to engage in a dialogue about animals.

In addition, some user queries contain explicit positive (e.g., “Thank you, Google,” or “I’m happy.”) or negative (e.g., “Stop talking,” or “Stop.”) feedback. While these are an order of magnitude less frequent than other queries, they offer a direct measure of user (dis)satisfaction. The RL model increases explicit positive feedback by 32% and reduces negative feedback by 18%.

Learned dynamic planning characteristics and strategies

We observe several characteristics of the (hidden) RL plan to improve user engagement while conducting longer conversations. First, the RL-based assistant ends 20% more turns in questions, prompting the user to choose additional content. It also makes better use of content diversity, including facts, sounds, quizzes, yes/no questions, open questions, etc. On average, the RL assistant uses 26% more distinct content providers per conversation than the supervised model.

Two observed RL planning strategies are related to the existence of sub-dialogues with different characteristics. Sub-dialogues about animal sounds are poorer in content and exhibit entity pivoting at every turn (i.e., after playing the sound of a given animal, we can either suggest the sound of a different animal or quiz the user about other animal sounds). In contrast, sub-dialogues involving animal facts typically contain richer content and have greater conversation depth. We observe that RL favors the richer experience of the latter, selecting 31% more fact-related content. Lastly, when restricting the analysis to fact-related dialogues, the RL assistant exhibits 60% more focus-pivoting turns, that is, conversational turns that change the focus of the dialogue.

We compare two example conversations, one conducted by the supervised model (left) and the other by the RL model (right), in which the first three user turns are identical. With a supervised dialogue manager, after the user declines to hear about “today’s animal”, the assistant pivots back to animal sounds to maximize immediate user satisfaction. While the conversation conducted by the RL model begins identically, it exhibits a different planning strategy that optimizes overall user engagement by introducing more diverse content, such as fun facts.

Future research and challenges

In the past few years, LLMs trained for language understanding and generation have demonstrated impressive results across multiple tasks, including dialogue. We are now exploring the use of an RL framework to give LLMs the capability of dynamic planning, so that they can plan ahead and delight users with a more engaging experience.

Acknowledgements

The work described is co-authored by: Moonkyung Ryu, Yinlam Chow, Orgad Keller, Ido Greenberg, Avinatan Hassidim, Michael Fink, Yossi Matias, Idan Szpektor and Gal Elidan. We would like to thank: Roee Aharoni, Moran Ambar, John Anderson, Ido Cohn, Mohammad Ghavamzadeh, Lotem Golany, Ziv Hodak, Adva Levin, Fernando Pereira, Shimi Salant, Shachar Shimoni, Ronit Slyper, Ariel Stolovich, Hagai Taitelbaum, Noam Velan, Avital Zipori and the CrowdCompute team led by Ashwin Kakarla. We thank Sophie Allweis for her feedback on this blogpost and Tom Small for the visualization.