Database performance is critical to keeping a web application or service fast and stable. As a service scales up, scaling the primary database along with it is often a challenge. MongoDB is frequently used as a primary online database and can meet the demands of very large-scale web applications, but it often becomes the bottleneck as well.

I had the opportunity to operate MongoDB at scale as a primary database at Foursquare, and encountered many of these bottlenecks. When MongoDB is the primary online database for a heavily trafficked web application, access patterns such as joins, aggregations, and analytical queries that scan large portions of a collection, or all of it, often cannot be run because of their adverse effects on performance. However, these access patterns are still required to build many application features.

We devised many strategies to deal with these situations at Foursquare. The main strategy for relieving pressure on the primary database is to offload some of the work to a secondary data store, and I will share some common patterns of this strategy in this blog series. In this post we will continue to use only MongoDB, but split the work from a single cluster across multiple clusters. In future articles I will discuss offloading to other types of systems.

Use Multiple MongoDB Clusters

One way to get more predictable performance and isolate the impact of querying one collection from another is to split them into separate MongoDB clusters. If you are already using a service-oriented architecture, it may make sense to also create separate MongoDB clusters for each major service or group of services. This way you can limit the impact of an incident on a MongoDB cluster to just the services that need to access it. If all of your microservices share the same MongoDB backend, then they are not truly independent of each other.
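As a rough illustration of the idea, here is a minimal sketch of a service-to-cluster mapping. The service names and connection strings are hypothetical placeholders, not anything we ran at Foursquare; the point is only that services sharing a cluster also share its failure domain.

```javascript
// Hypothetical mapping of services to MongoDB clusters.
// Service names and hosts are placeholders for illustration only.
const clusterForService = {
  feed: "mongodb://feed-cluster.example.internal:27017",
  profiles: "mongodb://accounts-cluster.example.internal:27017",
  settings: "mongodb://accounts-cluster.example.internal:27017",
};

// Return the connection string a service should use, failing loudly
// if the service has not been assigned a cluster.
function connectionStringFor(service) {
  const uri = clusterForService[service];
  if (uri === undefined) {
    throw new Error(`No MongoDB cluster configured for service: ${service}`);
  }
  return uri;
}

// List the services that would be affected by an incident on one cluster.
function servicesAffectedBy(uri) {
  return Object.keys(clusterForService).filter(
    (service) => clusterForService[service] === uri
  );
}

console.log(servicesAffectedBy(connectionStringFor("profiles")));
```

In this sketch an incident on the accounts cluster affects `profiles` and `settings`, while `feed` keeps running against its own cluster.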

For new development, you can start any new collections on a brand-new cluster. You can also move work currently done by existing clusters to new clusters, either by migrating a collection wholesale to another cluster or by creating new denormalized collections in a new cluster.

Migrating a Collection

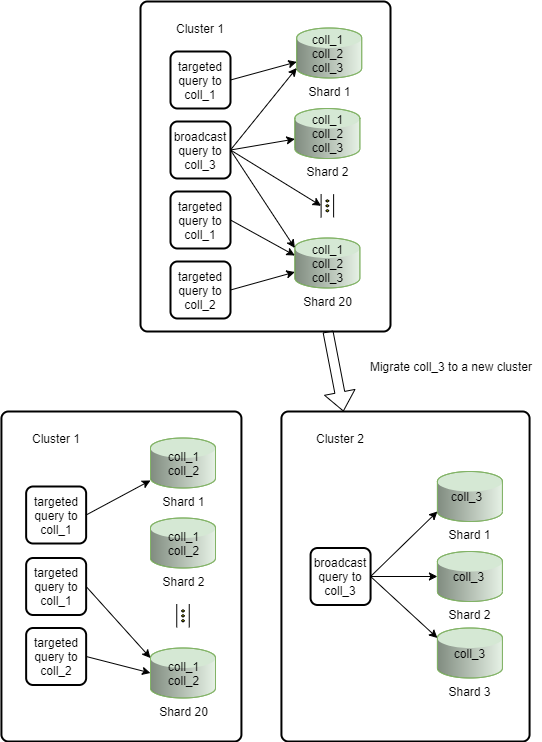

The more similar the query patterns are for a particular cluster, the easier it is to optimize and predict its performance. If you have collections with very different workload characteristics, it may make sense to separate them into different clusters in order to better optimize cluster performance for each type of workload.

For example, suppose you have a widely sharded cluster where most queries specify the shard key and so are targeted to a single shard. However, there is one collection where most queries do not specify the shard key and are therefore broadcast to all shards. Because the cluster is widely sharded, the work amplification of these broadcast queries grows with every additional shard. It may make sense to move this collection to its own cluster with far fewer shards, in order to isolate the load of the broadcast queries from the other collections on the original cluster. The broadcast queries themselves are also likely to perform better after the move. Lastly, separating the disparate query patterns makes the cluster's performance easier to reason about: when several query patterns are slow at once, it is often unclear which one is causing the degradation and which ones are slow merely because they are suffering from it.
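To make the work amplification concrete, here is a small back-of-the-envelope sketch. The shard counts and query rates are made-up numbers for illustration, and the model is deliberately simple: a targeted query costs one shard-level operation, and a broadcast query costs one operation on every shard.

```javascript
// Estimate shard-level operations per second for a mix of query types.
// All inputs are illustrative assumptions, not measurements.
function shardOperationsPerSecond(shardCount, targetedQps, broadcastQps) {
  // A targeted query includes the shard key, so a single shard serves it.
  const targeted = targetedQps;
  // A broadcast query is sent to every shard in the cluster.
  const broadcast = broadcastQps * shardCount;
  return { targeted, broadcast, total: targeted + broadcast };
}

// One wide cluster serving both workloads.
const wideCluster = shardOperationsPerSecond(50, 10000, 500);
console.log(wideCluster); // { targeted: 10000, broadcast: 25000, total: 35000 }

// The broadcast-heavy collection moved to its own, much smaller cluster.
const smallCluster = shardOperationsPerSecond(5, 0, 500);
console.log(smallCluster); // { targeted: 0, broadcast: 2500, total: 2500 }
```

Under these assumptions, 500 broadcast queries per second generate more shard-level work on the 50-shard cluster than 10,000 targeted queries do, and a tenth of that work on a 5-shard cluster.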

Denormalization

Denormalization can be used within a single cluster to reduce the number of reads your application needs to make to the database by embedding extra information into a document that is frequently requested with it, thus avoiding the need for joins. It can also be used to split work into a completely separate cluster by creating a brand new collection with aggregated data that frequently needs to be computed.

For example, if we have an application where users can make posts about certain topics, we might have three collections:

users:

    {
      _id: ObjectId('AAAA'),
      name: 'Alice'
    },
    {
      _id: ObjectId('BBBB'),
      name: 'Bob'
    }

topics:

    {
      _id: ObjectId('CCCC'),
      name: 'cats'
    },
    {
      _id: ObjectId('DDDD'),
      name: 'dogs'
    }

posts:

    {
      _id: ObjectId('PPPP'),
      name: 'My first post - cats',
      user: ObjectId('AAAA'),
      topic: ObjectId('CCCC')
    },
    {
      _id: ObjectId('QQQQ'),
      name: 'My second post - dogs',
      user: ObjectId('AAAA'),
      topic: ObjectId('DDDD')
    },
    {
      _id: ObjectId('RRRR'),
      name: 'My first post about dogs',
      user: ObjectId('BBBB'),
      topic: ObjectId('DDDD')
    },
    {
      _id: ObjectId('SSSS'),
      name: 'My second post about dogs',
      user: ObjectId('BBBB'),
      topic: ObjectId('DDDD')
    }

Your application may want to know how many posts a user has ever made about a certain topic. If these are the only collections available, you would have to run a count on the posts collection, filtering by user and topic. This would require an index like {'topic': 1, 'user': 1} in order to perform well. Even with this index, MongoDB would still need to scan the index entries for every post that user has made on that topic. To mitigate this, we can create a new collection user_topic_aggregation:

user_topic_aggregation:

    {
      _id: ObjectId('TTTT'),
      user: ObjectId('AAAA'),
      topic: ObjectId('CCCC'),
      post_count: 1,
      last_post: ObjectId('PPPP')
    },
    {
      _id: ObjectId('UUUU'),
      user: ObjectId('AAAA'),
      topic: ObjectId('DDDD'),
      post_count: 1,
      last_post: ObjectId('QQQQ')
    },
    {
      _id: ObjectId('VVVV'),
      user: ObjectId('BBBB'),
      topic: ObjectId('DDDD'),
      post_count: 2,
      last_post: ObjectId('SSSS')
    }

This collection would have an index {'topic': 1, 'user': 1}. We would then be able to get the number of posts made by a user for a given topic by scanning only one key in the index. This new collection can also live in a completely separate MongoDB cluster, which isolates this workload from your original cluster.
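The difference between the two approaches can be sketched with plain in-memory data. This is not driver code: the arrays stand in for the collections above, a Map stands in for the {'topic': 1, 'user': 1} index, and ObjectIds are shortened to strings. Against a real deployment the two reads would roughly correspond to a count on posts and a single-document lookup on user_topic_aggregation.

```javascript
// In-memory stand-ins for the collections above (ObjectIds shortened to strings).
const posts = [
  { _id: "PPPP", user: "AAAA", topic: "CCCC" },
  { _id: "QQQQ", user: "AAAA", topic: "DDDD" },
  { _id: "RRRR", user: "BBBB", topic: "DDDD" },
  { _id: "SSSS", user: "BBBB", topic: "DDDD" },
];
const userTopicAggregation = [
  { _id: "TTTT", user: "AAAA", topic: "CCCC", post_count: 1, last_post: "PPPP" },
  { _id: "UUUU", user: "AAAA", topic: "DDDD", post_count: 1, last_post: "QQQQ" },
  { _id: "VVVV", user: "BBBB", topic: "DDDD", post_count: 2, last_post: "SSSS" },
];

// Counting from posts: every matching post has to be visited.
function countFromPosts(topic, user) {
  return posts.filter((p) => p.topic === topic && p.user === user).length;
}

// Stand-in for the index on the aggregation collection:
// exactly one entry per user/topic edge.
const aggregationIndex = new Map(
  userTopicAggregation.map((doc) => [`${doc.topic}|${doc.user}`, doc])
);

// Counting from the aggregation collection: a single keyed lookup.
function countFromAggregation(topic, user) {
  const doc = aggregationIndex.get(`${topic}|${user}`);
  return doc ? doc.post_count : 0;
}

console.log(countFromPosts("DDDD", "BBBB"));       // 2
console.log(countFromAggregation("DDDD", "BBBB")); // 2
```

Both functions return the same answer, but the cost of the first grows with the number of posts on the edge, while the second stays at one lookup no matter how prolific the user is.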

What if we also wanted to know the last time a user made a post for a certain topic? With only the original collections, this is a query that MongoDB struggles to answer efficiently. You can make use of the new aggregation collection and store the ObjectId of the last post for a given user/topic edge, which then lets you easily find the answer by running the ObjectId.getTimestamp() function on the ObjectId of the last post.
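This works because the first four bytes of an ObjectId encode its creation time as seconds since the Unix epoch. The sketch below reimplements that extraction in plain JavaScript so it runs without a MongoDB driver; the function name and the sample id are made up for illustration, and in the shell you would simply call getTimestamp() on the ObjectId.

```javascript
// Extract the creation time from a 24-character hex ObjectId string.
// A stand-in for ObjectId.getTimestamp(); the function name is hypothetical.
function objectIdToDate(hex) {
  if (!/^[0-9a-fA-F]{24}$/.test(hex)) {
    throw new Error("Expected a 24-character hex string");
  }
  // The first 4 bytes (8 hex characters) are seconds since the Unix epoch.
  const seconds = parseInt(hex.slice(0, 8), 16);
  return new Date(seconds * 1000);
}

// A made-up ObjectId whose timestamp bytes are 0x5f5e1000 (1,600,000,000 seconds).
const lastPostId = "5f5e10000000000000000000";
console.log(objectIdToDate(lastPostId).toISOString()); // 2020-09-13T12:26:40.000Z
```

Note that the embedded timestamp has only one-second resolution, and it reflects when the ObjectId was generated, which is normally when the post was inserted.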

The tradeoff of doing this is that when making a new post, you need to update two collections instead of one, and it cannot be done in a single atomic operation. This also means the denormalized data in the aggregation collection can become inconsistent with the data in the original collections. There would then need to be a mechanism to detect and correct these inconsistencies.
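One possible shape for such a mechanism is a periodic job that recomputes the aggregates from the posts and overwrites any that disagree. The sketch below uses in-memory stand-ins for both collections, and all function names are hypothetical. It also assumes posts are never deleted, so it does not look for aggregate entries that have no posts behind them.

```javascript
// In-memory stand-ins for the posts and user_topic_aggregation collections.
const posts = [];
const aggregates = new Map(); // "topic|user" -> { post_count, last_post }

const edgeKey = (topic, user) => `${topic}|${user}`;

// Write 1: store the post itself.
function insertPost(post) {
  posts.push(post);
}

// Write 2: update the denormalized aggregate. This is a separate,
// non-atomic step, so it can be lost if something fails in between.
function bumpAggregate(post) {
  const key = edgeKey(post.topic, post.user);
  const current = aggregates.get(key) || { post_count: 0, last_post: null };
  aggregates.set(key, { post_count: current.post_count + 1, last_post: post._id });
}

// Recompute every edge from the posts and overwrite aggregates that differ.
// Returns the keys of the edges that had to be corrected.
function reconcile() {
  const expected = new Map();
  for (const post of posts) {
    const key = edgeKey(post.topic, post.user);
    const entry = expected.get(key) || { post_count: 0, last_post: null };
    expected.set(key, { post_count: entry.post_count + 1, last_post: post._id });
  }
  const corrected = [];
  for (const [key, entry] of expected) {
    const stored = aggregates.get(key);
    if (!stored || stored.post_count !== entry.post_count || stored.last_post !== entry.last_post) {
      aggregates.set(key, entry);
      corrected.push(key);
    }
  }
  return corrected;
}

// Both writes succeed for the first post.
const firstPost = { _id: "PPPP", user: "AAAA", topic: "CCCC" };
insertPost(firstPost);
bumpAggregate(firstPost);

// The second post is stored, but its aggregate update is lost.
insertPost({ _id: "QQQQ", user: "AAAA", topic: "DDDD" });

const corrected = reconcile();
console.log(corrected); // [ 'DDDD|AAAA' ]
```

A full recomputation like this is itself a scan of the posts collection, so in practice you would want to run it infrequently, restrict it to recently written data, or run it against a replica that does not serve production traffic.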

It only makes sense to denormalize data like this if the ratio of reads to updates is high, and it is acceptable for your application to sometimes read inconsistent data. If you will be reading the denormalized data frequently, but updating it much less frequently, then it makes sense to incur the cost of more expensive and complex updates.

Summary

As usage of your primary MongoDB cluster grows, carefully splitting the workload among multiple MongoDB clusters can help you overcome scaling bottlenecks. It can help isolate your microservices from database failures, and also improve the performance of queries with disparate patterns. In subsequent posts, I will talk about using systems other than MongoDB as secondary data stores to enable query patterns that are not possible to run on your primary MongoDB cluster(s).
